Ruyi Ding

Assistant Professor, Division of Electrical and Computer Engineering, Louisiana State University (LSU)

Previously: PhD, Northeastern University

Research Interests: AI Security, Hardware Security, Side-Channel Analysis

In Chinese, "Ruyi" (如意) means "fulfilling one's aspirations," representing the pursuit of a bright future and steadfast resolve.

About Me

I am an Assistant Professor in the Division of Electrical and Computer Engineering at Louisiana State University. My research focuses on AI security and hardware security, with an emphasis on neural network robustness, privacy preservation, and side-channel analysis.

I am actively looking for PhD students for Spring 2026 and Fall 2026. Feel free to email me if you are interested in AI security or hardware security!

News

- [2025-08] One paper was accepted to CCS 2025. See you in Taipei, China!

- [2025-05] Invited to serve as a TPC member for ICCAD 2025.

- [2025-04] Received a HOST 2025 Travel Grant. Thank you, HOST!

- [2025-04] Selected as a DAC Young Fellow. Thank you, DAC!

- [2025-03] Received the Northeastern 2025 Outstanding PhD Student Research Award. Thank you, Northeastern!

Research Interests

- AI Security: Exploring machine learning security and privacy issues during training, inference, and deployment.

- Hardware Security: Security and privacy of embedded DNNs.

- Side-channel Analysis: Power/EM side-channel analysis and microarchitectural SCA.

- Data Analysis: Traffic data analysis and event detection.

Publications

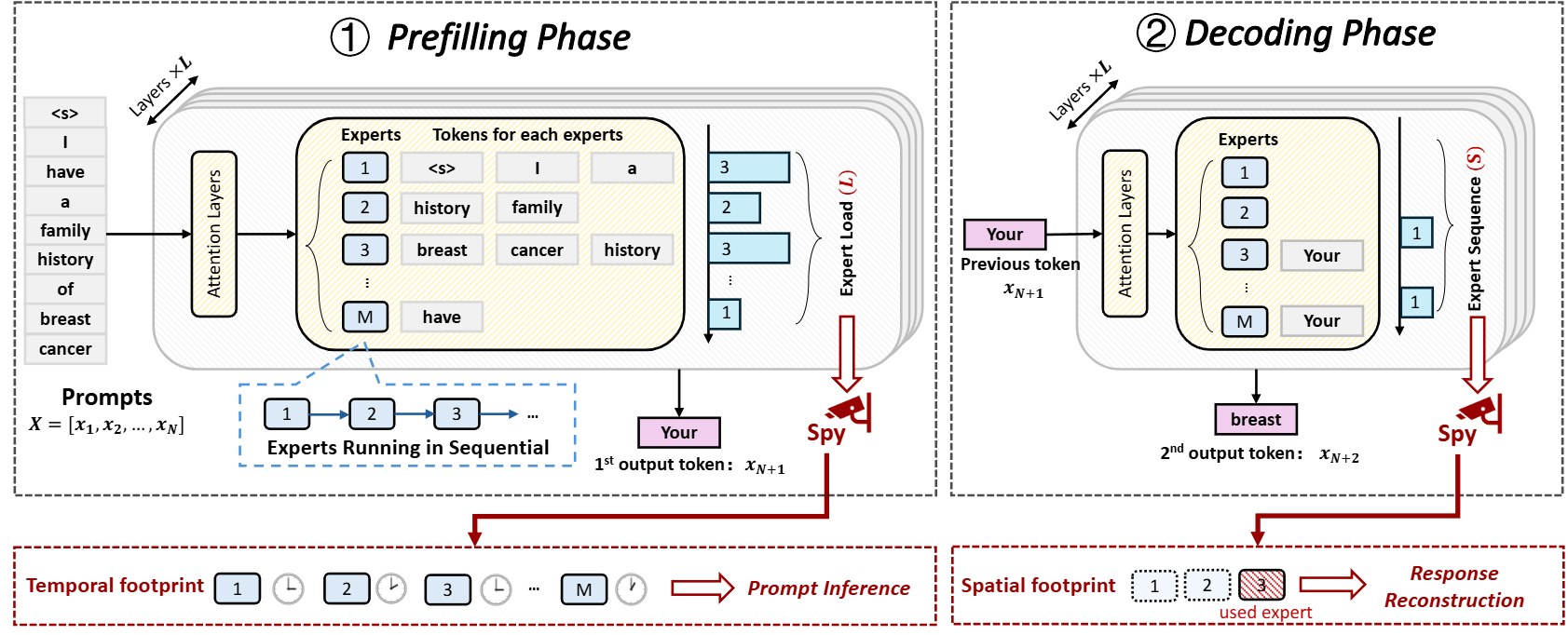

- CCS 2025. MoEcho: Exploiting Side-Channel Attacks to Compromise User Privacy in Mixture-of-Experts LLMs. PDF

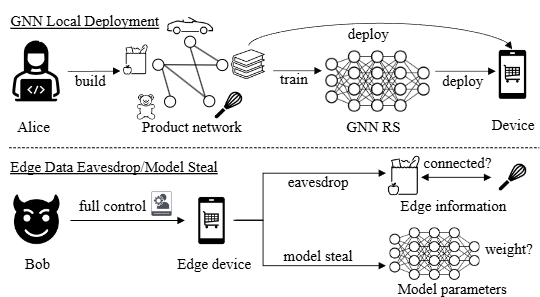

- DAC 2025. Graph in the Vault: Protecting Edge GNN Inference with TEE. PDF

- HOST 2025. MACPruning: Dynamic Operation Pruning to Mitigate Side-Channel DNN Model Extraction. PDF

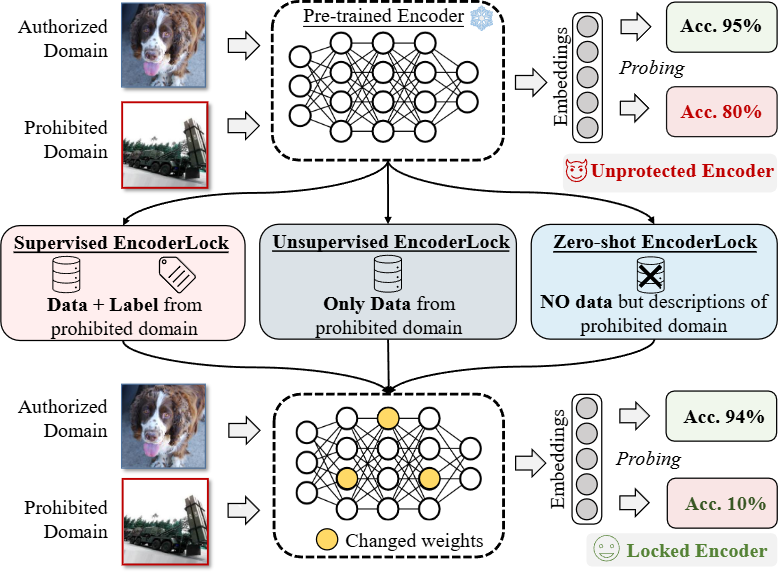

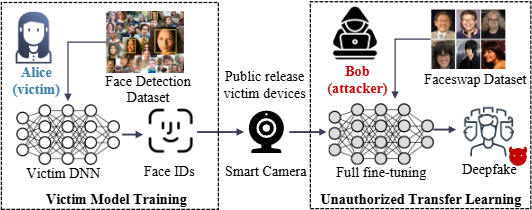

- NDSS 2025. Probe-Me-Not: Protecting Pre-trained Encoders from Malicious Probing. PDF

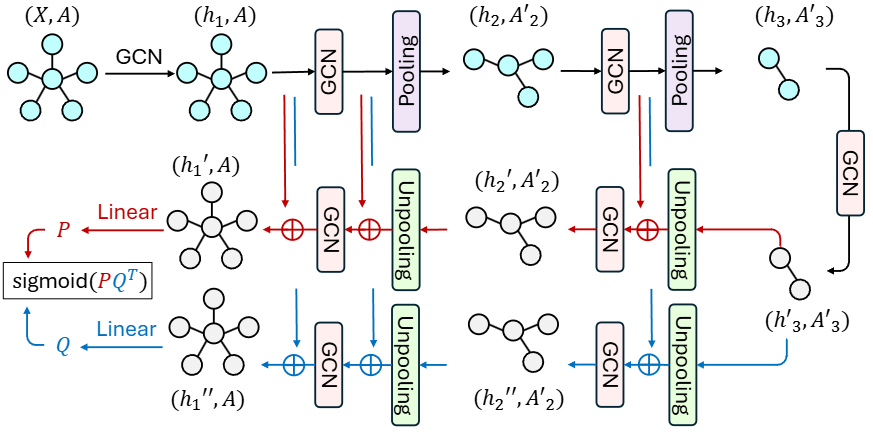

- NeurIPS 2024. GraphCroc: Cross-Correlation Autoencoder for Graph Structural Reconstruction. PDF

- ECCV 2024. Non-transferable Pruning for Controlled Model Reuse. PDF

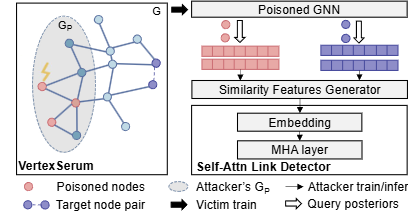

- ICCV 2023. VertexSerum: Poisoning Graph Neural Networks for Link Inference. PDF

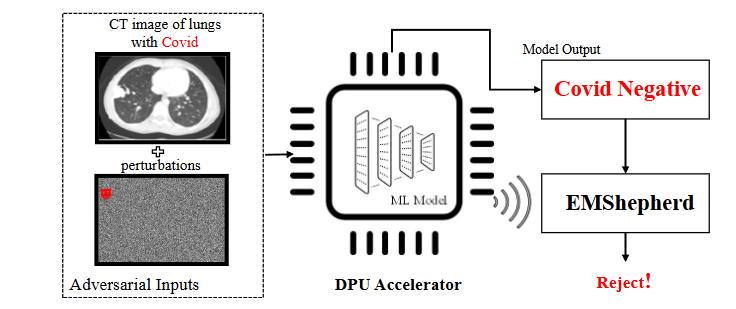

- ASIACCS 2023. EMShepherd: Detecting Adversarial Samples via Side-channel Leakage. PDF

For a complete list, please see my Google Scholar.

Teaching

Fall 2025

EE 4745 - Neural Computing, Louisiana State University

Office Hours: M 3-4pm | Location: PFT Hall 1212

Contact

Feel free to reach out to me at ruyiding[at]lsu[dot]edu or connect with me on LinkedIn.